The academic discipline of artificial intelligence was founded in 1956 at a research workshop at Dartmouth College, Hanover, New Hampshire (a private research institution established in 1769 by Eleazar Wheelock). Since then, researchers in the field have raised ethical and philosophical questions about the nature of the human mind and voiced concerns about creating artificial beings with human-like intelligence. The idea of automated art is far older and dates back to the automata of ancient Greek civilisation. Inventors such as Daedalus and Hero of Alexandria are credited with designing machines that could write text, produce sounds, and play music.

Hero was a Greek mathematician and engineer who worked in Alexandria, Egypt, during the Roman era; respected as the greatest experimenter of antiquity, he produced work that illustrated the Hellenistic scientific tradition. Daedalus was a mythical architect, inventor, and sculptor who built the Labyrinth for King Minos of Crete. The ancient Greeks and Romans gave the name ‘Labyrinth’ to a building that was completely or partially underground and contained a complex system of chambers, blind alleys, and passageways. In myth, Hephaestus, the Greek god of invention, created the first machine: a giant bronze robot named Talos. More than two thousand five hundred years ago, long before the technology to build such things existed, Greek, Roman, Indian, and Chinese mythology explored ideas for creating artificial life, automata, human augmentations, self-moving devices, and animated machines. Artists and researchers have used AI to create artistic works since the field was founded in 1956. During the 1970s, Harold Cohen produced and exhibited generative AI paintings created by a computer program he wrote.

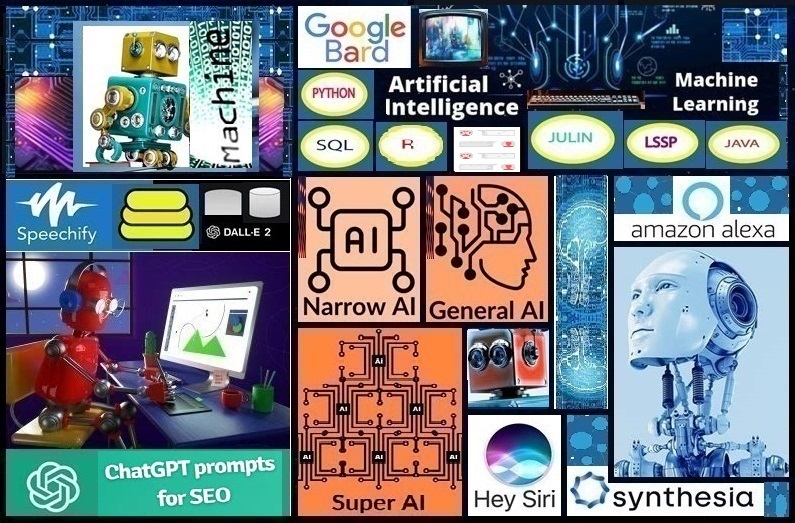

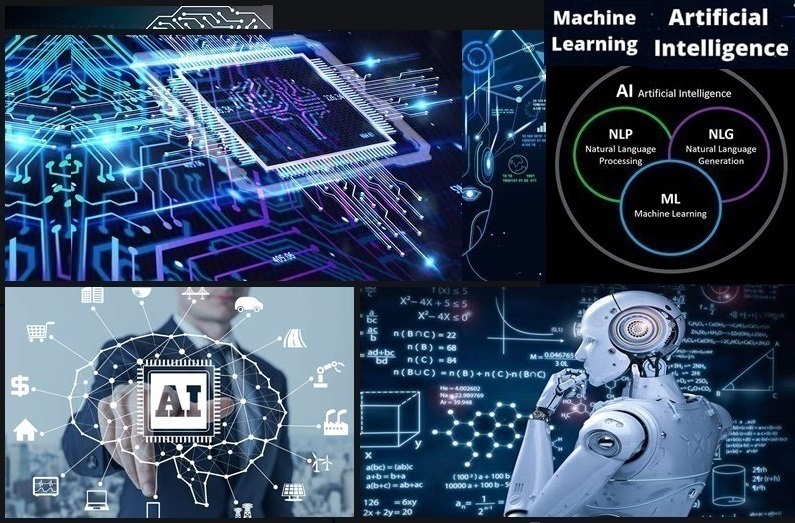

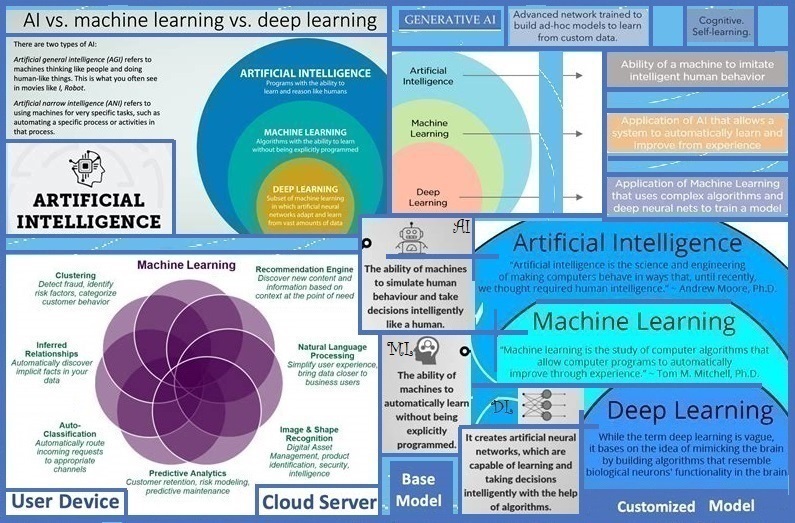

Artificial intelligence refers to the development of computer systems that can perform tasks that normally require human intelligence. To achieve the best possible results on difficult problems, companies combine several AI and analytical techniques, such as contextual analysis, deep learning, knowledge graphs, machine learning, and natural language processing. Because no single AI technique suits every purpose, this combination, known as composite AI, is used to improve learning proficiency and deepen the interpretation of knowledge. Composite AI provides a platform to combine multiple AI techniques, address a wider range of challenges, and interpret data successfully. Digital twins are virtual replicas of physical objects, processes, or systems that simulate the behaviour of their physical counterparts to show how they work in real life. A digital twin is connected to real data sources in its environment, so it updates in real time to mirror the original. These digital models serve as virtual counterparts of real-world physical assets for simulation, monitoring, integration, maintenance, and testing.

The emergence of deep learning advanced research in image classification, natural language processing, speech recognition, and other disciplines. Machine learning (ML) is a sub-branch of AI and computer science that enables computers to ‘learn’ and improve through experience and data. ML focuses on data, algorithms, and statistical and generative models. A generative machine learning model creates new, original content such as images, text, or music based on patterns and structures learned from existing data. Other ML algorithms imitate aspects of human behaviour by analysing data, categorising images, or predicting price variations. IBM has a rich history in machine learning. Natural language processing (NLP) is a subfield of AI, computer science, and linguistics concerned with the interactions between computers and human language, in particular with programming computers to process and analyse substantial amounts of natural language data. NLP allows machines to dissect and interpret human language. It underlies many tools we use every day, such as search engines, grammar correction software, translation software, spam filters, voice assistants, chatbots, and social media monitoring tools. The goal is a computer capable of ‘understanding’ the contents of documents, so that it can accurately extract information and insights and categorise the documents.
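To make the idea of categorising documents from data concrete, the following is a minimal sketch of a spam filter, one of the everyday NLP tools mentioned above. It assumes a tiny, invented training set and uses a naive Bayes classifier with add-one smoothing, chosen here only for illustration; the function names and example messages are hypothetical and do not describe how any particular product works.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase a message and split it on whitespace."""
    return text.lower().split()


def train(examples):
    """Count words per label from (text, label) pairs."""
    word_counts = {}          # label -> Counter of words seen under that label
    label_counts = Counter()  # label -> number of training messages
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(tokenize(text))
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocab


def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    word_counts, label_counts, vocab = model
    total_messages = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        # Start from the prior: how common this label is in the training data.
        score = math.log(label_counts[label] / total_messages)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            # Add-one smoothing so unseen words do not zero out the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Invented training messages, for illustration only.
TRAINING_DATA = [
    ("win free money now", "spam"),
    ("free prize claim now", "spam"),
    ("meeting moved to noon", "ham"),
    ("lunch at noon tomorrow", "ham"),
]

model = train(TRAINING_DATA)
print(classify("claim your free money", model))
print(classify("see you at the meeting tomorrow", model))
```

The classifier never receives explicit rules about what spam looks like; it only counts which words appeared under which label, which is the sense in which such a system ‘learns’ from data.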

Generative artificial intelligence (GenAI, sometimes abbreviated GAI) is a large sub-branch of AI that builds on existing technologies, which is why it developed rapidly over a brief period. GenAI has been described as a discipline that studies the ‘completely programmed’ creation of intelligence. This contrasts with contemporary AI, which studies the understanding and explanation of intelligence by humans. GenAI goes beyond conventional ML, combining different techniques to improve AI’s versatility and efficiency. Rather than solely analysing existing information, GenAI trains models on massive amounts of data so that they learn patterns and structures, which in turn allows them to generate original content. GenAI relies on deep-learning models to generate text, speech, graphics, images, ideas, conversations, simulations, audio, videos, code, structures, product designs, synthetic data, and other content based on the vast amounts of data they were trained on. Many generative AI systems, particularly those that produce text, are powered by large machine learning models known as large language models (LLMs). Unlike traditional AI systems, which are designed to recognise patterns and make predictions, GenAI uses deep learning algorithms to produce new content across a wide range of applications. Around 2014, generative adversarial networks (GANs) and variational autoencoders (VAEs) became the first practical deep neural networks that could learn generative models of complex data and output complete sets of original data.
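The principle of learning patterns from data and then generating new content can be illustrated at toy scale. The sketch below is not a deep-learning model; it swaps in a simple bigram (Markov chain) text generator, assuming an invented one-sentence corpus, purely to show the two phases of training and generation. All names in it are hypothetical.

```python
import random
from collections import defaultdict


def train_bigrams(corpus):
    """Record, for each word, the words that followed it in the corpus."""
    followers = defaultdict(list)
    words = corpus.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)


def generate(followers, start, length, rng):
    """Walk the learned transitions to produce up to `length` words."""
    output = [start]
    while len(output) < length:
        options = followers.get(output[-1])
        if not options:  # dead end: this word was never followed by another
            break
        output.append(rng.choice(options))
    return output


# Invented corpus, for illustration only.
CORPUS = "the cat sat on the mat and the dog sat on the rug"

followers = train_bigrams(CORPUS)
sample = generate(followers, "the", 8, random.Random(0))
print(" ".join(sample))
```

The generated sequence can differ from anything in the corpus while still following word-to-word patterns observed during training. Real generative models replace the lookup table with a neural network holding millions or billions of learned parameters.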

Generative AI: 1) consists of AI tools that rely on substantial amounts of third-party data, neural network architectures, and complex algorithms to create original content; 2) represents a conceptual shift in innovation, affecting enterprises that invest in AI applications; 3) uses neural networks to recognise patterns in existing data and generate original content; and 4) is used in several industries such as art, fashion, finance, gaming, healthcare, marketing, product design, software development, and script writing. One of the breakthroughs with generative AI models is the ability to leverage different learning methods for training, such as semi-supervised or unsupervised learning. While the conversation around this technology has concentrated on AI image and art generation, generative AI can do much more than generate static images from text prompts. GenAI’s transformative tools have enabled individuals and organisations to create music, art, and other forms of media with far less effort.

In 2017, the Transformer network was introduced and enabled improvements in generative models, leading in 2018 to the first Generative Pre-trained Transformer (GPT), named GPT-1. In 2019, this model was followed by GPT-2, which, as a foundation model, could generalise without supervision to many different tasks. In 2021, OpenAI released DALL-E (a transformer-based pixel generative model). These developments marked the arrival of high-quality artificial intelligence art from natural language prompts. GPT-4 was released in March 2023.

Because of its capabilities, the technology has fascinated both the virtual and the real world and is evolving into a transformative innovation. A notable example of generative AI is predictive search; Google trains LLMs on billions of search queries made by users over the years. Well-known large language model (LLM) chatbots include ChatGPT, Bing Chat, and Bard, while LLaMA is a family of LLMs. Text-to-image artificial intelligence art systems include Stable Diffusion (an AI art generation program developed by Stability AI), Midjourney (developed by an independent research laboratory in San Francisco), and DALL-E (an AI system that can create original, realistic images and art from a short text description). As with OpenAI’s DALL-E and Stability AI’s Stable Diffusion, Midjourney creates images from natural language descriptions known as ‘prompts.’

ChatGPT is a chatbot that lets users steer a conversation towards a desired level of detail, format, language, length, and style. Bing is a web search engine owned and operated by Microsoft. The service traces its roots back to Microsoft’s earlier search engines: MSN Search, Windows Live Search, and Live Search. Live Search became Bing in June 2009. Bing offers a broad spectrum of search services, incorporating web, image, video, and map search products. In February 2023, Microsoft launched Bing Chat, an AI chatbot based on GPT-4 and integrated directly into the search engine. The chatbot was well received, and Bing reached one hundred million active users the following month. Bard is a generative AI chatbot developed by Google AI, originally based on the LaMDA family of large language models and later on the PaLM LLMs. Bard was released in March 2023 and is available in two hundred and thirty-eight countries and forty-six languages. LLaMA is a family of LLMs released by Meta AI since February 2023. For the first version of LLaMA, four model sizes were trained: seven, thirteen, thirty-three, and sixty-five billion parameters. Meta AI is an artificial intelligence research laboratory that focuses on generating knowledge for the AI community. Its objective is to improve augmented and artificial reality technologies and to develop several categories of artificial intelligence. Investment in generative AI surged during the early 2020s, with large companies such as Microsoft, Google, and Baidu developing generative AI models to create text, images, and other media. Survey results revealed that high-performing companies such as OpenAI, DeepMind, and Nvidia invested heavily in more traditional AI capabilities as well as in GenAI, using reinforcement learning from human feedback to make generative AI output more reliable.

Applications such as ChatGPT and DALL-E became popular in 2022. OpenAI launched ChatGPT (Chat Generative Pre-trained Transformer), its large language model-based chatbot written in Python, in November 2022. OpenAI’s DALL-E can make realistic and context-aware edits, inserting, removing, or retouching specific sections of an image based on a natural language description. Its flexibility allows users to create and edit original images, from artistic to photorealistic. DALL-E works from natural language descriptions; users simply describe what they want to see. More than one and a half million users, including artists, architects, authors, and creative directors, are actively creating more than two million images per day with DALL-E. Artificial intelligence researchers describe DALL-E as a neural network: a mathematical system loosely modelled on the network of neurons in the brain.

The output of a generative model depends on what the AI was trained on: if it was trained on text, the output will be text, as in large language models. Algorithms and models such as OpenAI’s ChatGPT (a chatbot built on top of a large language model) can be prompted to generate several types of content. Apple has invested in generative AI to improve its Siri, Messages, and Apple Music products. GenAI has stimulated several start-ups and encouraged major investments by Amazon, Google, and Microsoft following the introduction of technologies such as ChatGPT, Bard, and DALL-E. AI writing for content generation, AI art, and AI video are GenAI applications that use deep learning algorithms to create new content from existing data. OpenAI’s ChatGPT and Google’s Bard rely on statistical likelihood: they build each response by predicting which words are most likely to come next. They are useful tools that can help with tasks such as data analysis, learning, teaching, and writing.
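What relying on statistical likelihood means in practice can be sketched in a few lines. The example below assumes a model has already assigned raw scores to three candidate next words; the scores, the candidate words, and the function names are all hypothetical. It converts the scores into probabilities with a softmax and then either picks the most likely word or samples one in proportion to its probability.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    scaled = [score / temperature for score in scores.values()]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return {token: e / total for token, e in zip(scores, exps)}


def sample_next(probabilities, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probabilities)
    weights = [probabilities[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for the word following "The capital of France is".
SCORES = {"Paris": 4.0, "Lyon": 2.0, "pizza": 0.5}

probs = softmax(SCORES)
most_likely = max(probs, key=probs.get)
print(most_likely, round(probs[most_likely], 3))
print(sample_next(probs, random.Random(1)))
```

Lowering the `temperature` argument concentrates probability on the top-scoring word, while raising it makes less likely words more competitive, which is one reason the same prompt can yield different responses.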

Generative AI is the latest innovation in the artificial intelligence industry and one of its most powerful technologies. The potential misuse of GenAI to manipulate or deceive people includes fake news and cybercrime. GenAI’s ability to create realistic fake content has been exploited in phishing scams. Generated audio and video have been used to spread disinformation and commit fraud. Cybercriminals have created LLMs such as FraudGPT and WormGPT to focus on fraud. Individuals, businesses, and governments have raised concerns. In the European Union, the proposed Artificial Intelligence Act includes the obligation to disclose copyrighted material used to train generative AI systems, and to label any AI-generated output. In the United States, a group of companies (including OpenAI, Meta, and Alphabet) signed a voluntary agreement in July 2023 to watermark AI-generated content. On 10 July 2023, the Cyberspace Administration of China (CAC), joined by six other central government regulators, issued the finalised text of the Interim Measures for the Management of Generative Artificial Intelligence Services, which govern services such as ChatGPT offered to the public of mainland China. The measures contain restrictions on personal data collection, regulations on training data and label quality, requirements to watermark generated images and videos, and guidelines for generative AI to ‘adhere to socialist core values.’ They are set to take effect on 15 August 2023.

On 2 October 2015, the restructuring of Google created Alphabet Inc. (headquartered in Mountain View, California) as Google’s public holding company; thus, Alphabet became the parent company of several former Google group companies. These companies now had greater independence to operate in businesses other than internet services. Alphabet has developed into one of the world’s most valuable companies; it is the world’s third-largest technology company by revenue. Along with Microsoft, Amazon, Apple, and Meta it is acknowledged as one of the Big Five American information technology companies. OpenAI is an American artificial intelligence research organisation and consists of the non-profit OpenAI Inc. registered in Delaware and its for-profit subsidiary corporation OpenAI Global LLC. The company was founded in 2015 by Andrej Karpathy, Durk Kingma, Greg Brockman, Ilya Sutskever, Jessica Livingston, John Schulman, Pamela Vagata, Trevor Blackwell, Vicki Cheung, and Wojciech Zaremba. Elon Musk and Sam Altman served as the original board members. Its headquarters are in San Francisco, California. The company’s products are GPT-1, GPT-2, GPT-3, GPT-4, ChatGPT, DALL·E, OpenAI Five, and OpenAI Codex. In 2019, Microsoft provided OpenAI Global LLC with a one-billion-dollar investment and in 2023 with a ten-billion-dollar investment.

Elon Musk describes AI as humanity’s ‘biggest existential threat.’ Sam Altman believes Artificial General Intelligence (AGI) will surpass human intelligence; AGI must not be confused with Generative Artificial Intelligence (GAI). AGI, or strong AI, is AI that exhibits human-like intelligence and is ‘generally smarter than humans.’ It would be a machine that could understand the world as well as any human and learn how to carry out a vast number of tasks. The definition is debated, but AGI is mainly understood as something significantly more advanced than what we presently have. Altman and Musk have stated that they are partly motivated by concerns about AI safety and the existential risk that AGI poses. OpenAI’s stance is that ‘it’s hard to fathom how much human-level AI could benefit society’; likewise, it is difficult to understand ‘how much it could damage society if used incorrectly.’ Scientists Stuart Russell and Stephen Hawking have expressed concerns that if advanced AI gains the ability to redesign itself at an ever-increasing speed, the resulting ‘intelligence explosion’ could lead to human extinction.

GenAI’s ability to create new and unique content will eventually affect industries and unlock opportunities in several fields such as art, entertainment, design, marketing, and social sciences. It has already shown immense potential in enhancing medical imaging through techniques such as data augmentation, image synthesis, image-to-image translation, and radiology report generation. The rapid development of GenAI can play a significant role in employee training and development through personalised learning programs and training paths adapted to individual needs. The technology will be able to analyse employees’ skills, interests, and professional development needs. Furthermore, it has the potential to transform government business processes, change how state employees perform their work, and improve government efficiency. Recent developments offer transformative change across various sectors such as scientific research, education, healthcare, and medicine. Generative AI can shape the future in many ways, whether through creating new forms of art and expression, supporting investment decisions, or improving health care outcomes.

GenAI has exposed how complex the computations are that are required to take AI to the next level. Although generative AI is still far from Artificial General Intelligence, it is estimated that by 2025 about ten percent of all data will be produced by generative AI.