From time to time we take part in experimental systems development projects. This time we built a pilot AI system. Here is how it went and what we achieved.

Machine learning and occupational safety in industry

At any industrial facility, personnel are monitored in some way for compliance with safety regulations and for the use of personal protective equipment. A lot of effort is now going into handing routine industrial monitoring functions over to artificial intelligence systems. Because these tasks are complex, companies often run large-scale open research projects to explore what AI can do.

The First Line Software team took part in one of these projects.

Task

The task was to build an AI system on top of the CCTV cameras already installed at the customer's facilities. The system captures the video stream from the cameras, identifies production zones, people, and safety equipment in the stream, and determines whether safety regulations are being followed or violated.

Process

First Line Software engineers created a convolutional neural network and trained it to recognize people and items of equipment in streaming video, such as hard hats, vests, and cables, as well as to identify the types of production zones. In the pilot version, the system detects and reacts to the three most common scenarios of personnel behavior:

- Whether an employee is wearing a hard hat, which is mandatory at work;

- Whether an employee is wearing a work jacket hood over the hard hat, which is strictly prohibited;

- Whether an employee is secured with a safety cable, which is mandatory for work at height.
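
The three scenarios above can be read as simple rules applied to whatever the network detects for each person. Below is a minimal sketch of that idea, not the project's actual code: the `PersonObservation` fields, the `at_height` flag, and the violation names are all hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PersonObservation:
    """Hypothetical per-person detection result for a single frame."""
    hard_hat_on_head: bool
    hood_over_hard_hat: bool
    cable_fastened: bool
    at_height: bool  # assumption: derived from the recognized production zone


def find_violations(obs: PersonObservation) -> List[str]:
    """Return the names of the safety rules this observation breaks."""
    violations = []
    # Scenario 1: a hard hat is mandatory at work.
    if not obs.hard_hat_on_head:
        violations.append("no_hard_hat")
    # Scenario 2: a jacket hood over the hard hat is prohibited.
    if obs.hood_over_hard_hat:
        violations.append("hood_over_hard_hat")
    # Scenario 3: a safety cable is mandatory only for work at height.
    if obs.at_height and not obs.cable_fastened:
        violations.append("no_safety_cable")
    return violations


if __name__ == "__main__":
    worker = PersonObservation(
        hard_hat_on_head=True,
        hood_over_hard_hat=True,
        cable_fastened=False,
        at_height=False,
    )
    print(find_violations(worker))
```

Keeping the rules separate from the detector in this way would let new scenarios be added without retraining, although the pilot itself may have been structured differently.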

Dataset

A frequent problem with machine learning projects in industry is the lack of ready-made training data for neural networks, because the field is new and each implementation is unique. We had to build and annotate a reference dataset from scratch. It included 56 sequences covering positive and negative scenarios of personnel behavior in the workplace.

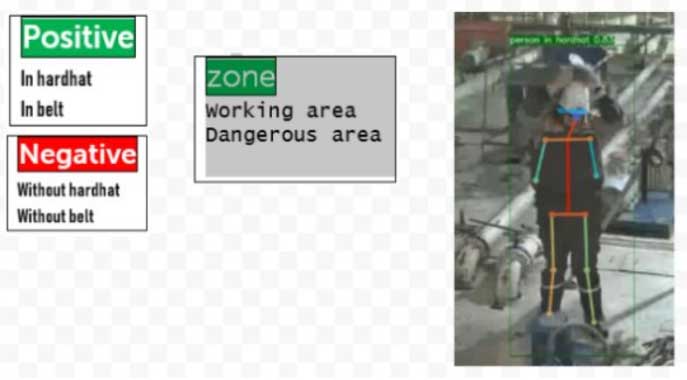

The images show the company’s employees, some of whom are equipped in line with all safety rules and some of whom are not. Each person is annotated with a skeleton model of 12 control points. The equipment is marked with additional points. Each frame has a text caption and a colored frame. Types of production zones are also marked.

Overview of annotated classes and example of transformation of an object into a skeleton model by control points
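
To make the annotation scheme more concrete, here is a rough sketch of how one annotated frame could be represented in code. Only the number of skeleton control points (12) comes from the description above. The class names, field names, and the example equipment key are assumptions, not the dataset's real format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) pixel coordinates

SKELETON_POINTS = 12  # number of control points per person, as described above


@dataclass
class PersonAnnotation:
    """Hypothetical annotation of one person in a frame."""
    skeleton: List[Point]
    # Extra points marking equipment, keyed by a hypothetical equipment name.
    equipment_points: Dict[str, List[Point]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Reject incomplete skeletons early so bad labels do not reach training.
        if len(self.skeleton) != SKELETON_POINTS:
            raise ValueError(
                f"expected {SKELETON_POINTS} skeleton points, got {len(self.skeleton)}"
            )


@dataclass
class FrameAnnotation:
    """Hypothetical annotation of a single video frame."""
    label: str      # the text caption attached to the frame
    zone_type: str  # the type of production zone
    people: List[PersonAnnotation] = field(default_factory=list)


if __name__ == "__main__":
    person = PersonAnnotation(
        skeleton=[(float(i), float(i)) for i in range(SKELETON_POINTS)],
        equipment_points={"hard_hat": [(3.0, 1.0)]},
    )
    frame = FrameAnnotation(label="compliant", zone_type="height", people=[person])
    print(len(frame.people[0].skeleton))
```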

Object recognition

The video stream is processed in three stages. First, frames with no people in them are filtered out. Then the parts of the video in which the system has recognized people are passed to the convolutional neural network. The network identifies the person from the markup and recognizes the safety equipment, such as a hard hat on the head or a cable around the torso. Finally, the algorithm uses the support vector machine (SVM) method to compare the object image against the template database. If the frame contains safety violations, the system sends a notification in accordance with the prescribed requirements.
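
The three stages can be sketched as a small pipeline in which each stage is a pluggable function. This is an illustration of the control flow only: `process_stream` and its parameter names are hypothetical, and the person filter, the neural network, and the final template comparison are passed in as plain callables rather than real models.

```python
from typing import Any, Callable, Iterable

Frame = Any     # placeholder for an image type such as a pixel array
Features = Any  # placeholder for whatever the network outputs


def process_stream(
    frames: Iterable[Frame],
    has_people: Callable[[Frame], bool],
    extract_features: Callable[[Frame], Features],
    is_violation: Callable[[Features], bool],
    notify: Callable[[Frame], None],
) -> int:
    """Run the three-stage check on every frame and return the alert count."""
    alerts = 0
    for frame in frames:
        # Stage 1: drop frames that contain no people.
        if not has_people(frame):
            continue
        # Stage 2: the neural network recognizes the person and the equipment.
        features = extract_features(frame)
        # Stage 3: compare the result with the reference templates.
        if is_violation(features):
            notify(frame)
            alerts += 1
    return alerts


if __name__ == "__main__":
    # Toy frames: dictionaries stand in for real images.
    stream = [
        {"people": 0, "hat": True},
        {"people": 1, "hat": True},
        {"people": 2, "hat": False},
    ]
    count = process_stream(
        stream,
        has_people=lambda f: f["people"] > 0,
        extract_features=lambda f: f,
        is_violation=lambda feat: not feat["hat"],
        notify=lambda f: print("violation in frame:", f),
    )
    print("alerts:", count)
```

Filtering out empty frames first means the more expensive network stage only runs on frames that could actually contain a violation.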

Technology

Mask R-CNN (on the Detectron platform) was used for image segmentation. It detects all the specified object classes and also segments each object instance in the frame. The neural network was trained using transfer learning, which works well when the dataset is limited and there is no need to collect statistics on the work, for instance how many employees are on site, which departments they belong to, and where they spend the most time.

Result

In the final version, we achieved stable video stream analytics with object recognition and behavior classification. Accuracy ranges from 77% to 100%. The pilot performed very well at the testing stage, and the customer is now continuing the tests. We will keep following its development, because the path from a pilot to an industrial solution is a long one.